There are a lot of books about artificial intelligence. The interlibrary site WorldCat lists over 36,000. Amazon claims to have over 20,000 for sale. Many carry histrionic titles, such as Life 3.0: Being Human in the Age of Artificial Intelligence; You Look Like a Thing and I Love You: How Artificial Intelligence Works and Why It’s Making the World a Weirder Place; and especially The Age of Spiritual Machines: When Computers Exceed Human Intelligence. Melanie Mitchell’s new book is more modestly titled but it is, in my opinion after surveying much of this literature, the most intelligent book on the subject. Mitchell is Professor of Computer Science at Portland State University as well as External Professor and Co-Chair of the Science Board at the Santa Fe Institute. And, unlike most active practitioners in the field, her evaluation of the current state of AI and its prospects is measured, cautious, and often skeptical.

The book begins with an introduction (“Prologue: Terrified”), a personal story of how she became involved with AI, inspired by Douglas Hofstadter’s Gödel, Escher, Bach: An Eternal Golden Braid. Through a mixture of luck, audacity, and persistence, Mitchell first became Hofstadter’s research student and then a doctoral student under him. Decades later, in 2014, at a Google conference she attended with him, she learned that Hofstadter was upset that one AI program had defeated the world chess champion and another had generated a music “composition” indistinguishable from (even judged better than) a genuine composition by Chopin. Hofstadter’s concerns inspired her to write about the pursuit of human-level AI (and beyond).

The book is divided into five parts: Background; Looking and Learning; Learning to Play; Artificial Intelligence Meets Natural Language; and The Barrier of Meaning. Although Mitchell states up front that the book isn’t intended to be a general survey or history of AI, she still manages to tell enough of its history—especially of its hubristic inauguration in 1956 by John McCarthy, Marvin Minsky, Allen Newell, and Herbert Simon—to put today’s enthusiastic optimism in perspective.

As Mitchell explains, one of the first branches in the pursuit of AI was artificial neural nets (ANNs), the foundation of today’s deep learning algorithms. She provides an example of such an ANN, the “perceptron,” designed to “learn” how to recognize hand-lettered digits. The illustrative grid is 18×18, and each square has four shades: white, light gray, dark gray, and black. Curiously, she doesn’t mention the fundamental problem of even such a relatively modest ANN: the number of different possible inputs in this case is 2 to the 326th power (2^326), or:

136,703,170,298,938,245,273,281,389,194,851,335,334,573,089,430,825,777,276,610,662,900,622,062,449,960,995,201,469,573,563,940,864

Such a program is effectively untestable. (This is true for the even more limited 8×8 binary grid, which she discusses a little later in a different context. In that case, the number of possible cases is only 2 to the 64th power (2^64), or 18,446,744,073,709,551,616.)
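The arithmetic behind these counts is easy to check. The following is a minimal Python sketch, not anything from Mitchell’s book: the function names are illustrative, and the rate of one billion test cases per second is an assumption chosen only to give a sense of scale. Note that counting all 18×18 = 324 squares at four shades each gives 4^324 = 2^648, larger still than the figure quoted above, so the conclusion about testability only gets stronger.

```python
def count_inputs(rows: int, cols: int, shades: int) -> int:
    """Number of distinct images on a rows x cols grid with `shades` levels per square."""
    return shades ** (rows * cols)


def years_to_enumerate(total: int, per_second: int = 10**9) -> int:
    """Whole years needed to try every input at an assumed (hypothetical) test rate."""
    seconds_per_year = 365 * 24 * 60 * 60
    return total // (per_second * seconds_per_year)


# The 8x8 binary grid: 2^64 possible inputs.
binary_8x8 = count_inputs(8, 8, 2)
print(binary_8x8)  # 18446744073709551616
print(years_to_enumerate(binary_8x8))

# The 18x18 grid with four shades per square: 4^324, which equals 2^648.
four_shade_18x18 = count_inputs(18, 18, 4)
print(four_shade_18x18 == 2 ** 648)  # True
print(len(str(four_shade_18x18)))    # number of decimal digits
```

Even the small binary grid would take centuries to test exhaustively at the assumed rate, and the four-shade grid is out of reach by any rate one cares to assume.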

This problem will come back to haunt today’s astonishingly successful deep learning programs, as Mitchell demonstrates in some detail later in the book.

Mitchell briefly discusses the famous Turing Test, which the seminal mathematician Alan Turing proposed in 1950 (calling it the Imitation Game) as a way to operationalize the troublesome concept of intelligence: a machine that can pass for human in conversation is deemed intelligent. This is equivalent to saying that NASA would have successfully landed on the moon if only it had done a better job of faking it, as some conspiracy theorists insist it tried to do. Mitchell points out how easily the Turing Test has been “passed” by trivial chatbots facing naive testers, but she doesn’t pass judgment on the validity of the test itself.

In Part II, Mitchell addresses the difficulty of image recognition and machine learning—particularly deep learning. She begins with a black-and-white version of this photograph:

Figure 1: A richly detailed story-telling photograph. AI image recognition programs have difficulty interpreting it beyond recognizing that it contains a dog. (Credit: U.S. Air Force photo by Airman 1st Class Erica Crossen)

Although there are a few recognizable entities in the photograph (a dog, a woman wearing a camouflage suit, a bouquet, a laptop, a “Welcome Home!” balloon, a suitcase, an American flag, and so on), this photograph tells a story, which is why it was deemed one of the 50 best military photos of 2015. No AI program in existence can even begin to make sense of it. For example, Microsoft’s CaptionBot, which makes the claim that “I can understand the content of any photograph and I’ll try to describe it as well as any human,” fails repeatedly with this photograph, giving the excuse “I seem to be under the weather right now. Try again later.” When I performed a Google image search on it, Google found only the dog. Having decided that, Google provided a huge number of “very similar” images, in which the only element in common was a dog.

This is just the introduction to Mitchell’s deeper discussion of image recognition, how deep learning programs work, and the fragility of their apparent success. It is apparently well known in the AI community, but little known outside it (or to me), that you can take a recognizable image and turn it into an apparently identical one that AI programs will identify, with great confidence, as whatever you like, including something entirely different. Mitchell gives several examples, including this pair:

Figure 2: A recognizable image can be imperceptibly altered so that it will be misidentified by image recognition software. (Left): Original: photograph correctly identified as a school bus. (Center): Differences to be applied to the original image to cause misidentification. (Right): Photograph apparently of a school bus incorrectly identified as an ostrich. (See “Intriguing properties of neural networks” for discussion.)

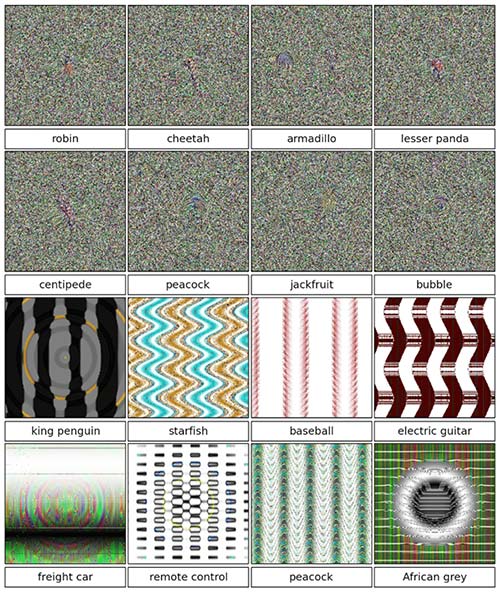
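How can a change too small to see flip the answer? One commonly offered intuition is that many tiny per-pixel nudges, all pushing in the same direction, add up. The Python sketch below illustrates that intuition only: it uses a hand-built linear “classifier” with made-up weights and pixel values, not a deep network and not the procedure from the paper cited in the caption. All names and numbers are hypothetical.

```python
# Toy illustration only: a hand-built linear "classifier" with made-up weights,
# not a deep network and not the method of the paper cited in the caption.

def score(weights, pixels, bias=0.0):
    """Weighted sum of pixel values; a positive score means 'school bus'."""
    return sum(w * p for w, p in zip(weights, pixels)) + bias


def label(weights, pixels, bias=0.0):
    return "school bus" if score(weights, pixels, bias) > 0 else "ostrich"


def perturb(weights, pixels, eps):
    """Shift each pixel by at most eps in the direction that lowers the score."""
    shifted = []
    for w, p in zip(weights, pixels):
        step = -eps if w > 0 else eps
        shifted.append(min(1.0, max(0.0, p + step)))  # keep pixels in [0, 1]
    return shifted


n = 10_000  # number of pixels in the toy image
weights = [0.01 if i % 2 == 0 else -0.01 for i in range(n)]
pixels = [0.51 if i % 2 == 0 else 0.49 for i in range(n)]

altered = perturb(weights, pixels, eps=0.02)
largest_change = max(abs(a - p) for a, p in zip(altered, pixels))

print(label(weights, pixels))    # school bus
print(label(weights, altered))   # ostrich
print(largest_change)            # about 0.02 on a 0-to-1 brightness scale
```

No single pixel moves by more than about two percent of the brightness range, yet across ten thousand pixels the nudges accumulate enough to push the score across the decision boundary.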

Perhaps even more stunning are the examples of TV snow and abstract geometric patterns identified (again, with great confidence) as specific entities:

Figure 3: TV snow patterns and abstract geometric patterns identified by AI as specific objects.

As Mitchell concludes, this reveals that these image recognition programs aren’t learning what we think they’re learning.

For some reason, Mitchell places the chapter entitled “On Trustworthy and Ethical AI” here in Part II (Looking and Learning) rather than in the concluding section where it more properly belongs. The 13 pages she devotes to it are inadequate to the subject, which is more thoroughly discussed even in Wikipedia, not to mention the hundreds of thousands of websites and even entire books.

In Part III, Learning to Play, Mitchell resumes both her enthusiasm and her mastery. Although this section is again rather short, her discussion of how robotic dogs have learned to play soccer and computer programs have learned to play Atari games, chess, and Go is clear and informative. And she’s appropriately skeptical of the credulous enthusiasm fostered by such successes: “It may sound strange to say, but…the lowest kindergartner in the school chess club is smarter than AlphaGo.”

The boundary between Part IV, Artificial Intelligence Meets Natural Language, and Part V, The Barrier of Meaning, is arbitrary and superfluous, precisely because, as Mitchell is well aware, language without meaning isn’t natural. But her discussion of both subjects is knowledgeable, informative, and strong, particularly on analogies, the subject of her doctoral thesis (published as Analogy-Making as Perception: A Computer Model).

Here, too, she’s justifiably skeptical: “It seems to me extremely unlikely that machines could ever reach the level of humans on translation, reading comprehension, and the like exclusively from online data, with essentially no real understanding of the language they process. Language relies on commonsense knowledge and understanding of the world…. Does the lack of humanlike understanding…inevitably result in their being brittle, unreliable and vulnerable to attack? No one knows the answer, and this fact should give us all pause.”

In the penultimate chapter, Mitchell reiterates the point she’s been making throughout the book with a photograph that, like the soldier coming home, is beyond the comprehension of any AI program (the humor of President Obama adding pounds to the weight scale):

Figure 4: A photograph used as an example of understanding beyond the comprehension of an AI program: President Obama surreptitiously adds a few pounds as a colleague weighs himself. (Credit: Official White House photo by Pete Souza)

The final chapter, “Questions, Answers, and Speculations,” is far less speculative than Mitchell pretends: “[T]he take-home message from this book is that we humans tend to overestimate AI advances and underestimate the complexity of our own intelligence. Today’s AI is far from general intelligence, and I don’t believe that machine ‘superintelligence’ is anywhere on the horizon.”

Artificial Intelligence: A Guide for Thinking Humans is a highly readable explanation of both AI’s recent impressive successes and its longstanding (and perhaps inherent) limitations, issues, and failures. Most remarkably, unlike skeptical outsiders such as myself, its author has been actively working in the field for her entire professional life.

About the Author

Peter Kassan, over the course of his long career in software, has been a programmer, a software technical writer, a manager of technical writers and programmers, and an executive at a software products company. He’s the author or co-author of several software patents. He’s been a skeptical observer of the pursuit of artificial intelligence and other matters for some time. He’s a regular contributor to Skeptic.

This article was published on December 31, 2019.

Consciousness is a mystery – perhaps free will as well. Is it possible we need to solve these, or at least understand them better, before REAL machine intelligence reaches that “horizon”?

Not that many will notice, but 4^234 is actually 2^468 (not 2^236). Nice review!

I’ll be interested to read this book. A key tenet of philosophy — a discipline much scorned these days, but which our public discourse withers for lack of — is, as Voltaire commanded, ‘define your terms’. No one bothers to coherently define ‘intelligence’.

I suggest the sine qua non of ‘intelligence’ is awareness (individual consciousness) and intentional creativity. Consider not only our species, but others to which we usually deny the quality of consciousness. Not long ago, I was watching a young squirrel in my backyard. (If you want to watch intelligence in action, set up some bird feeders and try to devise a way to keep squirrels out!) The squirrel raced partway up a young tree (one nowhere near a feeder, not providing him access to the seed) and out onto a flimsy branch that bent and deposited him on the ground. After a moment, he raced back up, onto the same branch, and down again. Up, down. To a human, it may seem senseless to go out on the branch even once, since it didn’t help him get the food, much less repeatedly. It took me a moment to realize the little guy was playing a game, like an amusement park ride.

I’m not impressed by a computer playing chess or go. I want to see a computer that is capable of getting bored and inventing a game like chess or go. I want to see a computer not only mimic the ability to compose classical music, but to want to. Show me a computer that can make a pun. Then you’ll have ‘intelligence’.