Over my years in chiropractic practice, I had cultivated referrals to and from large medical groups. From that experience, Palmer College in San Jose hired me in 1999 to meet with medical directors around the San Francisco Bay to develop observational rounds and internships for chiropractic students. In my presentations, I reported both supportive and negative studies. I thought the aggregate inconclusive evidence showed spinal manipulation (SM) was “better than nothing.” Working some of the time was better than nothing, right? I later found out my conclusion of “better than nothing” was wrong.

As a practicing chiropractor, I had unraveled some tough cases, including lifting my next-door neighbor physician off his own waiting room floor, pushing him to my office in a wheelchair, and sending him back pushing his own wheelchair within the hour. Certainly, I remember my successes more than my failures. Yet those I believed I had helped might have stayed out of emergency care long enough to recover on their own. Nonetheless, chiropractic and spinal manipulation research always failed to support and guide my clinical outcomes.

Replication Crisis

Around 2010, the Replication Crisis erupted in behavioral science. It threatened to invalidate our knowledge of psychology and medicine, caused us to question the efficacy of treatments, and shook our faith in scientific findings. Reportedly, as many as 70% of key studies in medical and psychological research did not get the same results when the experiments were repeated, casting doubt on the original results, some of which had become foundations of psychological knowledge and medical practice.1, 2 In response, behavioral scientists in many fields acknowledged the need to replicate the findings of key studies.

Though I did not specifically test for replicability in psychological and medical studies, I happened upon a loophole in spinal manipulation research that could explain the larger problem of replication (or the lack thereof) in clinical science. The loophole only applies to weak and marginal treatments, a common classification of many alternative medicines when compared against simple passage of time, attentiveness, placebos, or exercise.

My insight was based on a recent experience just before the replication crisis in which I had adapted a meta-analysis to assess why SM research was not replicable. After nearly 50 years of research on low back pain, mostly as delivered by chiropractors, nothing was consistent or even close to authoritative. For every study that supported spinal manipulation as being an effective palliative treatment for low back pain, there was another showing no effect. No specific provider or apparatus stood apart from the pack. Low back pain remained scientific terra incognita as we entered the 21st Century.

Spinal manipulation today is offered by almost all chiropractors, and to a lesser degree by osteopaths, naturopaths, physical therapists, bonesetters, Chinese medicine practitioners, a few physicians, some massage therapists, and various others. Chiropractors are also the primary SM researchers. There are also many back pain devices. If you search for “back pain relief devices” on Amazon, you will see over 5,000 product listings.

In short, lower back pain is a problem for which any treatment works for at least some sufferers, but none works particularly well, though many claim to work for everyone. When you think about it, the notion that every treatment works might actually mean its opposite – that none work because non-pathological pain eventually resolves on its own.3, 4

Chiropractic and Statistical Inference

The weak methodological link that makes alternative medicine look good now and then is also the likely suspect in the replicability crisis, namely, null hypothesis significance testing (NHST). NHST is the most used and accepted tool in clinical science, but it is easily fooled by weak treatments, where control groups are almost as effective as, or even more effective than, the treatment.

NHST forces nuanced outcomes into a binary decision of accepting or rejecting the null hypothesis. When a control group proves to be as effective as the treatment, it means the treatment does not work. However, NHST might be hiding that the control group performed even better than the treatment. We do not know, because everyone is invested in the performance of the treatment, not the control group. In fact, a successful control group means, by definition, rejecting the hypothesis that the “treatment” is effective. If a control group is equally matched with treatment, the two conditions will take turns besting each other across studies. Two equally-matched horses or sailboats will almost never tie in every race. They will take turns winning, at the rate of a binomial function. A treatment will win over equally effective control groups in 50% of two-armed trials, 33% of three-armed trials, and 25% of all four-armed trials. This was found in my meta-analysis of 2014.
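The 50%, 33%, and 25% figures follow from symmetry: if every arm is equally effective, each arm is equally likely to post the best average result. The simulation below is a minimal sketch of that reasoning, not the method used in the meta-analysis. The function name, sample sizes, and the normally distributed outcomes are all illustrative assumptions, and "winning" here means only having the best mean outcome, not reaching statistical significance.

```python
import random


def treatment_win_rate(n_arms, n_trials=5000, n_per_arm=20, seed=0):
    """Fraction of simulated trials in which arm 0 (the "treatment") has the
    best mean outcome when every arm draws from the same distribution.

    All parameters are hypothetical and chosen only for illustration.
    """
    rng = random.Random(seed)
    wins = 0
    for _ in range(n_trials):
        # Every arm, treatment and controls alike, has identical true effect.
        means = [
            sum(rng.gauss(0.0, 1.0) for _ in range(n_per_arm)) / n_per_arm
            for _ in range(n_arms)
        ]
        if means[0] == max(means):
            wins += 1
    return wins / n_trials


if __name__ == "__main__":
    for arms in (2, 3, 4):
        simulated = treatment_win_rate(arms)
        print(f"{arms}-armed trials: simulated {simulated:.3f}, expected {1 / arms:.3f}")
```

With equally matched arms, the simulated win rate should hover near 1 divided by the number of arms, which is the pattern described above.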

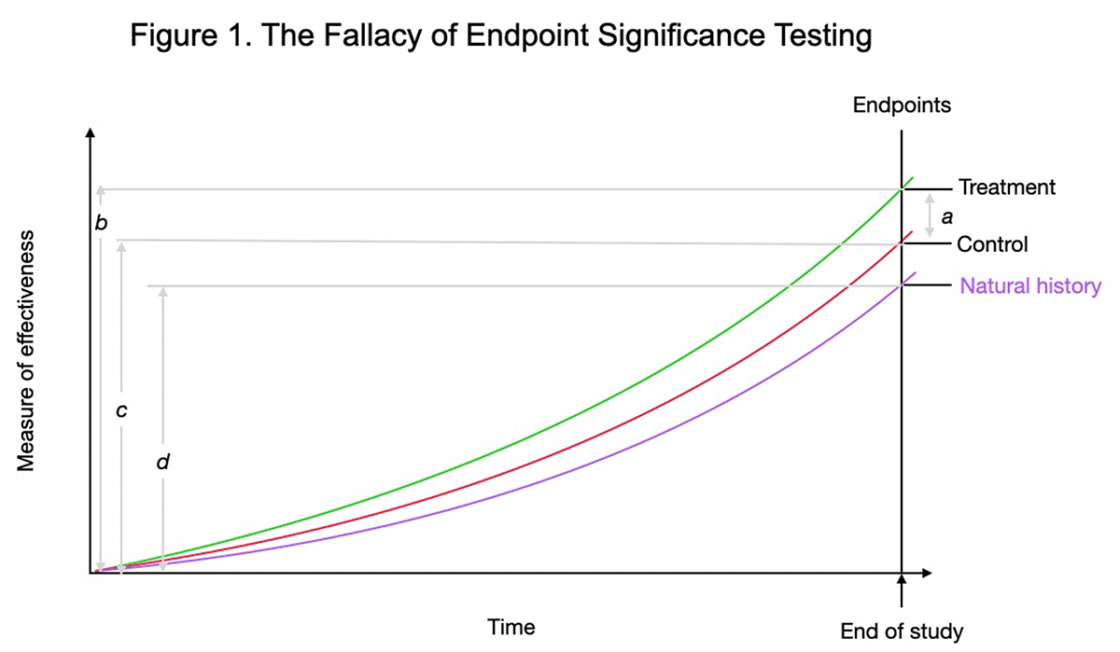

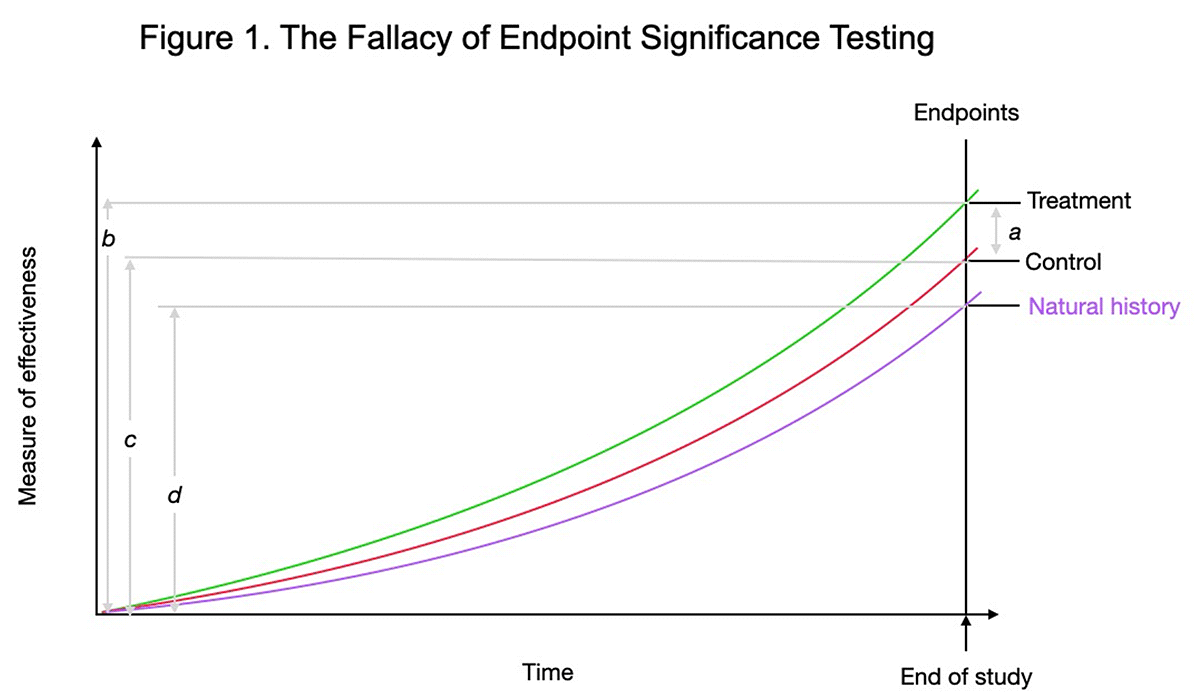

For an example of how NHST and summary data perpetuate misunderstanding, see Figure 1.

Figure 1. Most studies focus on the significant difference at study endpoints between average treatment and average control outcomes (distance a). The better measure of condition effectiveness is the relative improvement from the start of the study, b versus c. The improvement of the control group is nearly 80% of that of the treatment group by the study’s end. So, for non-life-threatening maladies, why treat when 80% of the relief is accomplished with the control group, which is usually much safer and cheaper? The “natural history” control group is shown to illustrate the large degree to which time heals most wounds.

Figure 1 displays common results from SM research on acute non-specific – or non-pathologic – low back pain (LBP). Notice the strong natural history trajectory of recovery without intervention (natural history). As a percentage of recovery, the passage of time is the prime driver. In my meta-analysis, 97% of the recovery from acute pain could be attributed to the passage of time,5 so the relative proportions in Figure 1 are very close to reality.
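The difference between the endpoint gap (a) and improvement from baseline (b versus c) in Figure 1 can be made concrete with a little arithmetic. The sketch below uses hypothetical pain scores on a 0 to 10 scale, chosen only so that the control arm achieves 80% of the treatment arm's improvement. They are not data from any study, and the function name is an assumption for illustration.

```python
def relative_improvement(baseline, treatment_end, control_end):
    """Return (a, b, c, ratio) for a two-armed trial sharing one baseline.

    a: endpoint gap between control and treatment
    b: improvement from baseline in the treatment arm
    c: improvement from baseline in the control arm
    ratio: c / b, the share of treatment improvement matched by control
    """
    b = baseline - treatment_end
    c = baseline - control_end
    a = control_end - treatment_end
    if b == 0:
        raise ValueError("treatment arm shows no change; ratio is undefined")
    return a, b, c, c / b


if __name__ == "__main__":
    # Hypothetical scores: both arms start at 8.0; lower is better.
    a, b, c, ratio = relative_improvement(baseline=8.0, treatment_end=3.0, control_end=4.0)
    print(f"endpoint gap a = {a}, treatment gain b = {b}, control gain c = {c}")
    print(f"control achieves {ratio:.0%} of the treatment improvement")
```

An endpoint gap of one point might reach statistical significance in a large enough trial, yet under these assumed numbers the control arm still delivers four fifths of the relief.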

Ceteris paribus is the foundation of clinical and basic sciences. It means “all else held equal.” To give a valid evaluation of treatment effectiveness, an experiment must control the extraneous factors. Ideally, all incidental, or non-specific, factors not directly related to treatment must be isolated from the treatment encounter to observe any true treatment effect.

This method only works, however, if the treatment is at its core a unitary construct that exists apart from the complex and chaotic clinical experience. Chiropractic researchers have claimed SM, their chiropractic adjustment, as their treatment that distinguishes them from other therapies.

However, chiropractic, other alternative therapies, and many medical ones do not have an unchangeable core “engine” that is alone responsible for clinical change. Chiropractic and alternative therapies in particular may be classified as emergent rather than latent constructs. Chiropractic treatment consists of an entire ensemble of possible causal agents, such as the professional office, waiting room, white coat, wall color, receptionist, confident provider, attributed credibility, and the patient’s perception and trust. As such, chiropractic and other alternative medicines cannot be distilled to isolate and identify their “active ingredient.”

With an emergent treatment, attempts to isolate any therapeutic “active ingredient” are only frustrating because putting aside the seemingly ineffective factors makes the treatment effect disappear. After decades in chiropractic research, I concluded that the chiropractic profession will persist into the near future, but not because of research evidence. Fancier statistics won’t overpower the lack of clinical outcomes. For instance, a 2018 study by Goertz et al. in the Journal of the American Medical Association (JAMA)6 failed to report effect sizes, confidence intervals, and coefficients of variation.

Chiropractic and Clinical Trials

The other methodology unfriendly to chiropractic research is the pharmaceutical randomized clinical trial. This method assumes that the treatments can be standardized as indistinguishable between a real treatment and a placebo pill and that patients all have the same diagnosis. This is not possible with chiropractic patients. As Brooks’s osteopathic text shows,7 tracing a disability or dysfunction to its antecedents to restore health is highly complex, individualized, and somewhat ritualized. There simply is no standard patient nor any standard treatment. There is no possibility of double-blinding because the patient knows when treatment is delivered. There is not even the possibility of single-blinding as the deliverer of SM cannot be blinded from his own intentions. A case can be made that no further clinical chiropractic research should be performed as it will only generate more of the same confusion as it has in the past 50 years, without identifying any truly effective method of treatment.8

Even with “all else equal,” ceteris paribus, treatment and control groups may also be equally effective. This is demonstrated when a meta-analysis shows half of two-armed trials demonstrate treatment effectiveness and the other half do not. Then, “more research is needed” will not solve the irreplicability. This is an exercise in futility that may keep chiropractic college enrollments topped up and practicing chiropractors motivated. Chiropractic research thus becomes marketing.

Conclusion

Tantalizing positive results flashing off and on across studies do not mean that a treatment works sometimes and is therefore better than nothing. Rather, such flashes are but an artifact of method (or the lack of a good method), and “better than nothing” really means “nothing.” Instead, it is necessary to estimate the probability of improvement for both treatment and control conditions. If the two estimates are close, then one should, for simplicity and economy, simply choose the control condition as the preferred treatment.
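One way to estimate the probability of improvement in each condition is to compute the proportion of patients who improved in each arm, with an interval around each estimate. The sketch below uses the Wilson score interval, which is my choice for illustration and not a method named in this article. The patient counts are hypothetical, and treating overlapping intervals as "close" is a rough assumed heuristic rather than a formal equivalence test.

```python
import math


def wilson_interval(improved, n, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives roughly 95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = improved / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


def compare_conditions(t_improved, t_n, c_improved, c_n):
    """Estimate the probability of improvement in both arms and flag overlap."""
    t_low, t_high = wilson_interval(t_improved, t_n)
    c_low, c_high = wilson_interval(c_improved, c_n)
    return {
        "treatment_estimate": t_improved / t_n,
        "control_estimate": c_improved / c_n,
        "treatment_interval": (t_low, t_high),
        "control_interval": (c_low, c_high),
        # Assumed heuristic: overlapping intervals count as "close".
        "intervals_overlap": t_low <= c_high and c_low <= t_high,
    }


if __name__ == "__main__":
    # Hypothetical counts: 62 of 100 improved with treatment, 58 of 100 with control.
    result = compare_conditions(62, 100, 58, 100)
    for key, value in result.items():
        print(key, value)
```

When the two estimates are this close, the argument above favors the control condition on grounds of simplicity and economy.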

In the end, chiropractic, other alternative medicines, and other irreplicable experiments may be good art but not good science. At their best, chiropractic and other forms of bodywork follow muscles and joints to offer relief from a disabling pain or dysfunction according to a patient’s very specific needs.9 Though chiropractors have placed a premium on scientific credibility and sponsor international research conferences each year, the profession has little to show for the expense and effort.

About the Author

Michael Menke was a chiropractor and chiropractic researcher in Northern California for 20 years. He is now a methodologist and measurement expert in health economics and outcomes research in the US, and formerly head of medical psychology in Kuala Lumpur, Malaysia. His research includes evaluating effective smoking cessation programs, gestational diabetes, accuracy of disease screening, traumatic brain injury, colorectal cancer, accuracy of salivary biomarkers, early detection of Parkinson’s disease, and low back pain. His PhD is from University of Arizona Department of Psychology and School of Pharmacy.

References

- https://bit.ly/3sKHXBR

- https://go.nature.com/3Ubu7nE

- https://bit.ly/3zxGdQf

- https://bit.ly/3fvoxO6

- https://bit.ly/3FyxCAt

- https://bit.ly/3SZo1Wh

- Brooks, W.J. (2022). A Treatise on the Functional Pathology of the Musculoskeletal System. Gatekeeper Press.

- https://bit.ly/3zxinnD

- Nirenberg, D. & Nirenberg, R.L. (2021). Uncountable: A Philosophical History of Number and Humanity from Antiquity to the Present. The University of Chicago Press.

This article was published on November 7, 2022.