Metaphors & Mindsets:

How to ‘Update’ Beliefs

The word “metaphor” derives from the Greek words “meta” (“change of place, order, condition, or nature”) and “phor” (to carry or transfer) and in common usage means to carry or transfer you from one idea that is difficult to understand to another idea that is easier to grasp. Many subjects in science are notoriously incomprehensible and so the use of metaphors is essential. The Newtonian “mechanical universe” metaphor, for example, carries us from the difficult idea of gravity and its spooky action at a distance to the more understandable “clockwork universe” of gears and wheels. In turn, this metaphor was employed by Enlightenment thinkers to explain the workings of everything from the human body with its levers and pulleys (joints, tendons, muscles) to the workings of political systems (with the king as the sun and his subjects as planets circling about him) and even economies — François Quesnay modeled the French economy after the human body, in which money flows through a nation like blood flows through a body, and ruinous government policies were like diseases that impeded economic health, leading him to propose laissez faire as a government policy.

The workings of the human mind are especially enigmatic, so scientists have invoked metaphors such as hydraulic mechanisms, electrical wires, logic circuits, computer networks, software programs, and information workspaces. Nobel laureate psychologist Daniel Kahneman famously invoked the metaphor of thinking as a dual system: fast and intuitive versus slow and rational. In The Scout Mindset Julia Galef, cofounder of the Center for Applied Rationality and host of the popular podcast Rationally Speaking, quite effectively uses the metaphor of mindsets — that of scouts and soldiers. The soldier mindset leads us to defend our beliefs against outside threats, seek out and find evidence to support our beliefs and ignore or rationalize away counterevidence, and resist admitting we’re wrong as that feels like defeat. The scout mindset, by contrast, seeks to discover CONTINUE READING THIS POST…

TAGS: belief, changing mind, cognition, evidence, human behavior, metaphor, psychology, reason, science, thinking, truth

The Skeptic’s Chaplain:

Richard Dawkins as a Fountainhead of Skepticism

Over the weekend of August 12–14, 2001, I participated in an event entitled “Humanity 3000,” whose mission it was to bring together “prominent thinkers from around the world in a multidisciplinary framework to ponder issues that are most likely to have a significant impact on the long-term future of humanity.” Sponsored by the Foundation for the Future—a nonprofit think tank in Seattle founded by aerospace engineer and benefactor Walter P. Kistler—long-term is defined as a millennium. We were tasked with the job of prognosticating what the world will be like in the year 3000.

Yeah, sure. As Yogi Berra said, “It’s tough to make predictions, especially about the future.” If such a workshop were held in 1950 would anyone have anticipated the World Wide Web? If we cannot prognosticate fifty years in the future, what chance do we have of saying anything significant about a date 20 times more distant? And please note the date of this conference—needless to say, not one of us realized that we were a month away from the event that would redefine the modern world with a date that will live in infamy. It was a fool’s invitation, which I accepted with relish. Who could resist sitting around a room talking about the most interesting questions of our time, and possibly future times, with a bunch of really smart and interesting people? To name but a few with whom I shared beliefs and beer: science writer Ronald Bailey, environmentalist Connie Barlow, twin research expert Thomas Bouchard, brain specialist William Calvin, educational psychologist Arthur Jensen, mathematician and critic Norman Levitt, memory expert Elizabeth Loftus, evolutionary biologist Edward O. Wilson, and CONTINUE READING THIS POST…

TAGS: belief, evolution, evolution and/or creationism, pseudoscience, religion, Richard Dawkins, science, scientific skepticism, selfish gene, tribute

Afrocentric Pseudoscience & Pseudohistory

Replicating Milgram:

A Study on Why People Commit Evil Deeds

In recent discussions about the “replication crisis” in science, in addition to a large percentage of famous psychology experiments failing to replicate, suggestions have also been made that some classic psychology experiments could not be conducted today due to ethical or practical concerns, the most notable being that of Stanley Milgram’s famous shock experiments. In fact, in 2010, Dr. Michael Shermer, working with Chris Hansen and Dateline NBC producers, replicated a number of classic psychology experiments, including Milgram. What follows is a summary of that research, from Chapter 9, Moral Regress, in Dr. Shermer’s book The Moral Arc, along with the two-part episode from the Dateline NBC show, called “What Were You Thinking?”

In 2010, I worked on a Dateline NBC two-hour television special in which we replicated a number of now classic psychology experiments, including that of Yale University professor Stanley Milgram’s famous shock experiments from the early 1960s on the nature of evil. Here we provide links to the two-part television episode of our replication of Milgram.

In public talks in which I screen these videos I am occasionally asked how we got this replication passed by an Institutional Review Board (an IRB), which is required for scientific research, inasmuch as such experiments could never be conducted today. We didn’t. This was for network television, not an academic laboratory, so the equivalent of an IRB was review by NBC’s legal department, which approved it. This seems to surprise — even shock — many academics, until I remind them of what people do to one another on reality television programs in which subjects are stranded on remote islands or thick jungles and left to fend for themselves — sometimes naked and afraid — in various contrivances that resemble CONTINUE READING THIS POST…

TAGS: Adolf Eichmann, agentic state, disobedience to authority, evil, free will, human behavior, moral reasoning, morality, obedience to authority, power of authority, punishment, shock experiment, Stanley Milgram, study replication

Suffrage & Success:

Celebrating the Centennial of Women’s Right to Vote

Today, August 18, marks the 100th anniversary of the adoption of the 19th Amendment to the Constitution of the United States, guaranteeing women the right to vote. We honor that momentous event with an excerpt adapted from the chapter on women’s rights in Dr. Michael Shermer’s 2015 book The Moral Arc: How Science and Reason Lead Humanity Toward Truth, Justice, and Freedom (New York: Henry Holt).

Read the essay below, or listen to it being read by the author, Michael Shermer:

On August 18, 1920, the 19th Amendment of the United States Constitution was ratified, legally securing the franchise to women. It was the culmination of a 72-year battle that began when Elizabeth Cady Stanton and Lucretia Mott organized the 1848 Seneca Falls conference, after attending the World Anti-slavery Convention in London in 1840 — a meeting at which they had come to participate as delegates, but at which they were not allowed to speak and were made to sit like obedient children in a curtained-off area. This did not sit well with Stanton and Mott. Conventions were held throughout the 1850s but were interrupted by the American Civil War, which secured the franchise in 1870 — not for women, of course, but for black men (though they were gradually disenfranchised by poll taxes, legal loopholes, literacy tests, threats and intimidation). This didn’t sit well either and only served to energize the likes of Matilda Joslyn Gage, Susan B. Anthony, Ida B. Wells, Carrie Chapman Catt, Doris Stevens, and countless others who campaigned unremittingly against the political slavery of women.

Things began to heat up when the great American suffragist Alice Paul (arrestingly portrayed by Hilary Swank in the 2004 film Iron Jawed Angels) returned from a lengthy sojourn in England. She had learned much during her time there through her active participation in the British suffrage movement and from the more radical and militant British suffragists, including the courageous political activist Emmeline Pankhurst, characterized as “the very edge of that weapon of willpower by which British women freed themselves from being classed with children and idiots in the matter of exercising the franchise.”1

Upon her death Pankhurst was heralded by the New York Times as “the most remarkable political and social agitator of the early part of the twentieth century and the supreme protagonist of the campaign for the electoral enfranchisement of women”;2 years later, Time magazine voted her one of the 100 most important people of the century. Thus, when Alice Paul returned from abroad she was ready for action, though the more conservative members of the women’s movement weren’t quite ready for Alice. Nevertheless, in order to attract attention to the cause she and Lucy Burns organized the largest parade ever held in Washington. On March 3, 1913 (strategically timed for the day before President Wilson’s inauguration), 26 floats, 10 bands, and 8,000 women marched, led by the stunning Inez Milholland wearing a flowing white cape and riding a white horse. (See Figure 1 above.) Upwards of 100,000 spectators watched the parade but the mostly male crowd became increasingly unruly and the women were spat upon, taunted, harassed and attacked while the police stood by. Afraid of an all-out riot, the War Department called in the cavalry CONTINUE READING THIS POST…

TAGS: 19th amendment, moral progress, morality, voting rights, women's rights, women’s suffrage

Fat Man & Little Boy

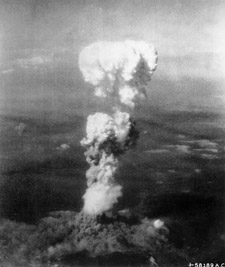

On the 75th anniversary of nuclear weapons, Dr. Michael Shermer presents a moral case for their use in ending WWII and the deterrence of Great Power wars since, and a call to eventually eliminate them. This essay was excerpted, in part, from Michael Shermer’s book, The Moral Arc, in the chapter on war.

On August 6, 1945 the Little Boy gun-type uranium-235 bomb exploded with an energy equivalent of 16–18 kilotons of TNT, flattening 69 percent of Hiroshima’s buildings and killing an estimated 80,000 people and injuring another 70,000.

Three quarters of a century ago this summer, nuclear weapons altered our civilization forever. On July 16 the Trinity plutonium bomb detonated with the energy equivalent of 22 kilotons (22,000 metric tons) of TNT, sending a mushroom cloud 39,000 feet into the atmosphere. The explosion left a crater 76 meters wide filled with radioactive glass called trinitite (melted quartz grained sand). It could be heard as far away as El Paso, Texas. On August 6 the Little Boy gun-type uranium-235 bomb exploded with an energy equivalent of 16–18 kilotons of TNT, flattening 69 percent of Hiroshima’s buildings and killing an estimated 80,000 people and injuring another 70,000. On August 9 the Fat Man plutonium implosion-type bomb with the energy equivalence of 19-23 kilotons of TNT leveled around 44 percent of Nagasaki, killing an estimated CONTINUE READING THIS POST…

TAGS: atomic bomb, deterrence, mass murder, morality, national security, nuclear deterrence, nuclear war, nuclear weapons, nuclear zero, war, WWII

Why People Believe Conspiracy Theories

What is a conspiracy, and how does it differ from a conspiracy theory? Michael Shermer explains who believes conspiracy theories and why they believe them in the following essay, derived from Lecture 1 of his 12-lecture Audible Original course titled “Conspiracies and Conspiracy Theories: What We Should Believe and Why.”

On Friday, March 15, 2019 a 28-year-old Australian man wielding five firearms stormed two mosques in Christchurch, New Zealand, and opened fire, killing 50 people and wounding dozens more. It was the worst mass public shooting in the history of that country, prompting Prime Minister Jacinda Ardern to reflect: “While the nation grapples with a form of grief and anger that we have not experienced before, we are seeking answers.”

One answer may be found in the shooter’s rambling 74-page manifesto titled The Great Replacement, apparently inspired by a book of the same title by the French author Renaud Camus. The Great Replacement is a right-wing conspiracy theory that claims that white Christian Europeans are being systematically replaced by people of non-European descent, most notably from North Africa, Sub-Saharan Africa, and the Arab Middle East, through immigration and higher birth rates.

The New Zealand killer’s name is Brenton Harrison Tarrant and his manifesto is filled with white supremacist tropes focused on this conspiracy theory, starting with his opening sentence “It’s the birthrates” repeated three times. “If there is one thing I want you to remember from these writings, it’s that the birthrates must change,” Tarrant insists. “Even if we were to deport all Non-Europeans from our lands tomorrow, the European people would still be spiraling into decay and eventual death.” Tarrant then cites the replacement fertility level of 2.06 births per woman, complaining that “not a single Western country, not a single white nation,” reaches this level. The result, he concludes, is “white genocide.”

This is classic 19th century blood-and-soil romanticism, and the self-described “Ethno-nationalist” Tarrant writes that he went on this murderous spree “to ensure the existence of our people and a future for white children, whilst preserving and exulting nature and the natural order.” His screed goes on and on like this, culminating in a photo collage of CONTINUE READING THIS POST…

TAGS: agenticity, belief, Bilderberg Group, cognitive bias, cognitive dissonance, confirmation bias, conspiracies, conspiracy theories, hindsight bias, JFK, Knights Templar, patternicity, Rothschilds, Zionist Occupation Government

The Moral Arc: How Thinking Like a Scientist Makes the World More Moral

In this, the final lecture of his Chapman University Skepticism 101 course, Dr. Michael Shermer pulls back to take a bigger picture look at what science and reason have done for humanity in the realm of moral progress. That is, applying the methods of science and principles of reason since the Scientific Revolution in the 17th century has solved not only problems in the physical and biological/medical fields, but in social and moral realms as well. How should we structure societies so that more people flourish in more places more of the time? Science can answer that question, and it has for centuries. Learning how to think like a scientist can make the world a better place, as Dr. Shermer explains in this lecture based on his 2015 book, The Moral Arc.

Shermer’s Chapman University course, Skepticism 101: How to Think Like a Scientist, covers a wide range of topics, from critical thinking, reasoning, rationality, free speech, cognitive biases and how thinking goes wrong, and the scientific methods, to actual claims and whether or not there is any truth to them, e.g., ESP, ETIs, UFOs, astrology, channelling, the Bermuda Triangle, psychics, evolution, creationism, Holocaust denial, and especially conspiracy theories and how to think about them.

MISSED A PREVIOUS LECTURE?

Watch the entire 15-lecture Chapman University Skepticism 101 series for free!

TAGS: age of reason, better angels, death penalty, enlightenment, enlightenment humanism, freedom, gay rights, human rights, justice, Moral Arc, moral progress, morality, murder, nuclear deterrence, nuclear weapons, same-sex marriage, science and reason, scientific revolution, self-control, skepticism 101 lectures, terrorism, truth, violence

What is Truth, Anyway?

In this lecture Dr. Michael Shermer addresses one of the deepest questions of all: what is truth? How do we know what is true, untrue, or uncertain? Given that none of us are omniscient, all claims to knowledge carry a certain level of uncertainty. Given that fact, how can we determine what is true? Included: subjective/internal vs. objective/external truths, Hume’s theory of causality, correlation and causation, the principle of proportionality (or why extraordinary claims require extraordinary evidence), how to think about miracles and the resurrection, mysterian mysteries, post-truth, rational irrationalities, the man who saved the world, Bayesian reasoning, and why love depends on evidence.

Skepticism 101: How to Think Like a Scientist covers a wide range of topics, from critical thinking, reasoning, rationality, cognitive biases and how thinking goes wrong, and the scientific methods, to actual claims and whether or not there is any truth to them, e.g., ESP, ETIs, UFOs, astrology, channelling, psychics, creationism, Holocaust denial, and especially conspiracy theories and how to think about them.

If you missed Dr. Shermer’s previous Skepticism 101 lectures watch them now.

TAGS: Bayesian reasoning, correlation and causation, love, miracles, post-truth, principle of proportionality, rational irrationalities, skepticism, skepticism 101 lectures, subjective/objective truths, theory of causality, truth

The Truth About Post-Truth Truthiness

Is post-truth the political subordination of reality? Is truth itself any more under threat today than in the past? Have the populists & postmodernists won the day? In response to Dr. Lee McIntyre’s essay, Dr. Michael Shermer asserts that people are not nearly as gullible as some believe.

Words embody ideas, and their changing usage and meaning are tracked by lexicographers in dictionaries, which therein become barometers of cultural trends. In 2006, for example, the American Dialect Society and Merriam-Webster’s both chose as their word of the year the neologism “truthiness”, introduced by the comedian Stephen Colbert on the premiere episode of his satirical mock news show The Colbert Report (on which I appeared twice1), meaning “the truth we want to exist.”2 It was a prescient comedic bit as a decade later three examples of truthiness entered our lexicon.

After Donald Trump’s Presidential inauguration on January 20, 2017, his special counselor Kellyanne Conway concocted the term “alternative facts” during a Meet the Press interview while defending White House Press Secretary Sean Spicer’s inaccurate statement about the size of the crowd that day. “Our press secretary, Sean Spicer, gave alternative facts to that [the inaugural crowd size], but the point remains that….” at which time NBC correspondent Chuck Todd cut her off: “Wait a minute. Alternative facts? … Alternative facts are not facts. They’re falsehoods.”3 German linguists deemed it the “un-word of the year” (Unwort des Jahres) for 2017. Later that year the related term “fake news” became common parlance, leaping in usage 365 percent and landing it on the “word of the year shortlist” of Collins Dictionary, which defined it as “false, often sensational, information disseminated under the guise of news reporting.”4

Such words (or un-words) are often invoked as evidence that we are living in a “post-truth” era brought on by Donald Trump (according to liberals) or by postmodernism (according to conservatives). Are we living in a post-truth world of truthiness, fake news, and alternative facts? Have the populists and postmodernists won the day? CONTINUE READING THIS POST…

TAGS: alternative facts, conspiracy theories, Donald Trump, epistemology, fake news, fascism, human delusion, politics, post-truth, postmodernism, progress, propaganda, reason, science, spindoctoring, truth

Is Freedom of Speech Harmful for College Students?

In this lecture, Dr. Michael Shermer addresses the growing crisis of free speech in college and in the culture at large. The lecture takes its title from a talk he was tasked to deliver to students at California State University, Fullerton, after a campus paroxysm erupted over “Taco Tuesday,” in which students accused other students of “cultural appropriation” for non-Mexicans appropriating Mexican food from Mexicans. If you’ve ever been to Southern California, the accusation is absurd on the face of it, inasmuch as Mexican cuisine is among the most popular dining options. From there Shermer reviews the history of free speech, the difference between government censorship and private censorship, the causes of the current crisis, and what we can do about it.

TAGS: censorship, civil liberty, constitution, deplatforming, diversity, feminism, First Amendment rights, free speech, freedom, freedom of inquiry, government censorship, hate speech, microaggressions, Milo Yiannopoulos, private censorship, rights, safe spaces, skepticism 101 lectures, trigger warnings, victimhood, virtue signaling

What are Science & Skepticism?

This lecture, traditionally the first in the series for the Skepticism 101 course, is based on the first couple of chapters from Dr. Michael Shermer’s first book, Why People Believe Weird Things, presenting a description of skepticism and science and how they work, along with a discussion of the difference between science and pseudoscience, and some very practical applications of how to test claims and evaluate evidence. The image for this lecture is the original oil painting for the first cover of Why People Believe Weird Things, commissioned by the publisher and painted by the artist Lawrence Berzon.

The audio is out of sync with the video in “What is a Skeptic?” Here’s the link to view it.

TAGS: belief, natural phenomena, pseudoscience, science, skepticism, skepticism 101 lectures, weird things

Evolution & Creationism, Part 2: Who says evolution never happened, why do they say it, and what do they claim?

Dr. Michael Shermer continues the discussion of evolution and creationism, focusing on the history of the creationism movement and the four stages it has gone through: (1) Banning the teaching of evolution, (2) Demanding equal time for Genesis and Darwin, (3) Demanding equal time for creation-science and evolution-science, and (4) Intelligent Design theory. Shermer provides the legal, cultural, and political context for how and why creationism evolved over the 150 years since Darwin published On the Origin of Species in 1859, thereby providing a naturalistic account of life, ultimately displacing the creationist supernatural account. Finally, Shermer reviews the best arguments made by creationists and why they’re wrong.

TAGS: Charles Darwin, creationism, evolutionary theory, Intelligent Design Theory, origin of species, science, Scopes Trial, skepticism 101 lectures

Wicked Games:

Lance Armstrong, Forgiveness and Redemption, and a Game Theory of Doping

Part 2 of the documentary film “Lance” airs tonight on ESPN and served as a catalyst for this article that employs game theory to understand why athletes dope even when they don’t want to, as well as thoughts on forgiveness and redemption. The article is a follow up to and extension of Dr. Shermer’s article in the April 2008 issue of Scientific American.

All images within are screenshots from Marina Zenovich’s 3 hour and 22 minute film and are courtesy of ESPN, who provided a press screener. In appreciation. Zenovich also produced Robin Williams: Come Inside My Mind (2018), Water & Power: A California Heist (2017), Richard Pryor: Omit the Logic (2013), and Roman Polanski: Odd Man Out (2012).

Toward the end of Marina Zenovich’s riveting documentary film on Lance Armstrong, titled simply Lance and broadcast on ESPN May 24 and May 31, 2020, the former 7-time Tour de France champion grouses about the apparent ethical hypocrisy of why TdF champions like the German Jan Ullrich (1997), the Italian Marco Pantani (1998), and himself (1999–2005) were utterly disgraced and had their lives ruined because of their doping, whereas cyclists such as the German Erik Zabel, the Italian Ivan Basso, and the American George Hincapie are “idolized, glorified, given jobs, invited to races, put on TV” even though they’re “no different from us” inasmuch as they doped as well. Of Pantani, whom Armstrong famously battled up many a mountainous climb, Lance scowls that “they disgrace Marco Pantani, they destroy him in the press, they kick him out of the sport, and he’s dead. He’s fucking dead!” Ditto Ullrich. “They disgrace, they destroy, and they fucking ruin Jan Ullrich’s life. Why? … That’s why I went. Because that’s fucking bullshit.”

This invective, in fact, comes on the heels of the most touching moment of the nearly 3.5-hour film, in which a lachrymose Armstrong loses his tough-guy composure when asked why he spontaneously flew to Europe to support his former rival in his time of need. Ullrich’s life was unraveling after a series of incidents involving drugs, alcohol, and violence, and Armstrong’s emotional fracture in recalling it is so out of character from his public image that it may give even his most cynical critics pause. His answer? CONTINUE READING THIS POST…

TAGS: cheating, competition, cycling, doping, drugs in sports, ethics, game theory, Lance Armstrong, Nash Equilibrium, performance enhancing drugs, restorative justice, retributive justice

Evolution & Creationism, Part 1

Dr. Michael Shermer takes viewers to the Galápagos Islands to retrace Darwin’s footsteps (literally — in 2006 Shermer and historian of science Frank Sulloway hiked and camped all over the first island Darwin visited) and show that, in fact, Darwin did not discover natural selection when he was there in September of 1835. He worked out his theory when he returned home, and Shermer shows exactly how Darwin did that, along with the story of the theory’s co-discoverer, Alfred Russel Wallace. Then Shermer outlines what, exactly, the theory of evolution explains, how it displaced the creationist model as the explanation for design in nature (wings, eyes, etc. as functional adaptations), and why so many people today still misunderstand the theory and how that sustained the creationist model.

About the photograph above

Charles Darwin described what he called the “craterized district” on San Cristóbal, Galápagos Islands, thus:

The entire surface of this part of the island seems to have been permeated, like a sieve, by the subterranean vapours: here and there the lava, whilst soft, has been blown into great bubbles; and in other parts, the tops of caverns similarly formed have fallen in, leaving circular pits with steep sides. From the regular form of the many craters, they gave to the country an artificial appearance, which vividly reminded me of those parts of Staffordshire, where the great iron-foundries are most numerous.

The photograph was taken on 21 June 2004 by Dr. Frank Sulloway. Darwin hiked this area in September, 1835.

TAGS: Alfred Russel Wallace, belief, Charles Darwin, creationism, evolution, Galápagos Islands, mythology, natural selection, origin of species, religion, science, skepticism 101 lectures

Holocaust Denial

In this lecture on Holocaust Denial, Dr. Michael Shermer applies the methods of science to history, showing how we can determine truth about the past. Many scholars in the humanities and social sciences do not consider history to be a science. Instead, they treat it as a field of competing narratives, none of which has a stronger claim to truth than any other. But as Dr. Shermer replies to this assertion, are we to understand that those who assert that the Holocaust never happened have equal standing to those who assert that it did? Of course not! It is here where most cultural relativists get off the relativity train, acknowledging that, in fact, we can establish certain facts about the past, no less than we can about the present.

Mentioned in this lecture

- Denying History (audio CD)

- Skeptic Magazine Vol. 2 No. 4 on Pseudohistory, Afrocentrism, and Holocaust Revisionism

- Skeptic Magazine Vol. 14 No. 3 on The New Revisionism

Pathways to Evil, Part 2

In Pathways to Evil, Part 2, Dr. Michael Shermer fleshes out the themes of Part 1 by exploring how the dials controlling our inner demons and better angels can be dialed up or down depending on circumstances and conditions. Are we all good apples but occasionally bad barrels turn good apples rotten, or do we all harbor the capacity to turn bad?

TAGS: civilization, compliance and obedience, conformity, dehumanization, deindividuation, diffusion of responsibility, evil, genocide, ideology, Milgram's Shock Experiment, morality, murder, Nazi gradualism, pluralistic ignorance, skepticism 101 lectures, Zimbardo's Prison Experiment

Pathways to Evil, Part 1

In Part 1 of his Pathways to Evil lecture, Dr. Michael Shermer considers the nature of evil in his attempt to answer the question of how you can get normal, civilized, educated, and intelligent people to commit murder and even genocide. Are we basically good and made bad by evil situations, or are we basically evil and made good by civilized society?

TAGS: altruism, civilization, evil, genocide, Hitler, homicide, morality, murder, reciprocity, revenge, skepticism 101 lectures

How to Think About the Bermuda Triangle

Dr. Michael Shermer examines the claims about the Bermuda Triangle using the tools of skepticism, science, and rationality to reveal that there is no mystery to explain. Selective reporting, false reporting, quote mining, anecdote chasing, and mystery mongering all conjoin to create what appears to be an unsolved mystery about the disappearance of planes and ships in this triangular-shaped region of the ocean. But when you examine each particular case, as did the U.S. Navy, Coast Guard, and especially insurance companies who have to pay out for such losses, it becomes clear that almost all have natural explanations, and the remaining unsolved ones are lying on the bottom of the ocean beyond our knowledge.

TAGS: anecdote chasing, Bermuda triangle, false reporting, quote mining, rationality, science, selective reporting, skepticism, skepticism 101 lectures, unsolved mystery

Politics of Belief

Dr. Michael Shermer explains how we arrived at the Left-Right spectrum, both historically and evolutionarily, and the numerous metaphors used to wrap our minds around such complex systems as politics and economics. This Chapman University lecture is based on chapters in his books The Believing Brain, The Moral Arc, and Giving the Devil His Due, along with the books of Christian Smith, George Lakoff, Alan Fiske, Jonathan Haidt, Thomas Sowell, and Steven Pinker.

TAGS: authority, climate change, cognitive bias, conservatism, fairness, gun control, ingroup loyalty, left vs right, liberalism, moral dilemmas, political bias, politics, reciprocity, skepticism 101 lectures